Observing Optimizer Behavior over Search Surfaces

Visualizing the training process over various optimization surfaces. – Nov 12, 2024

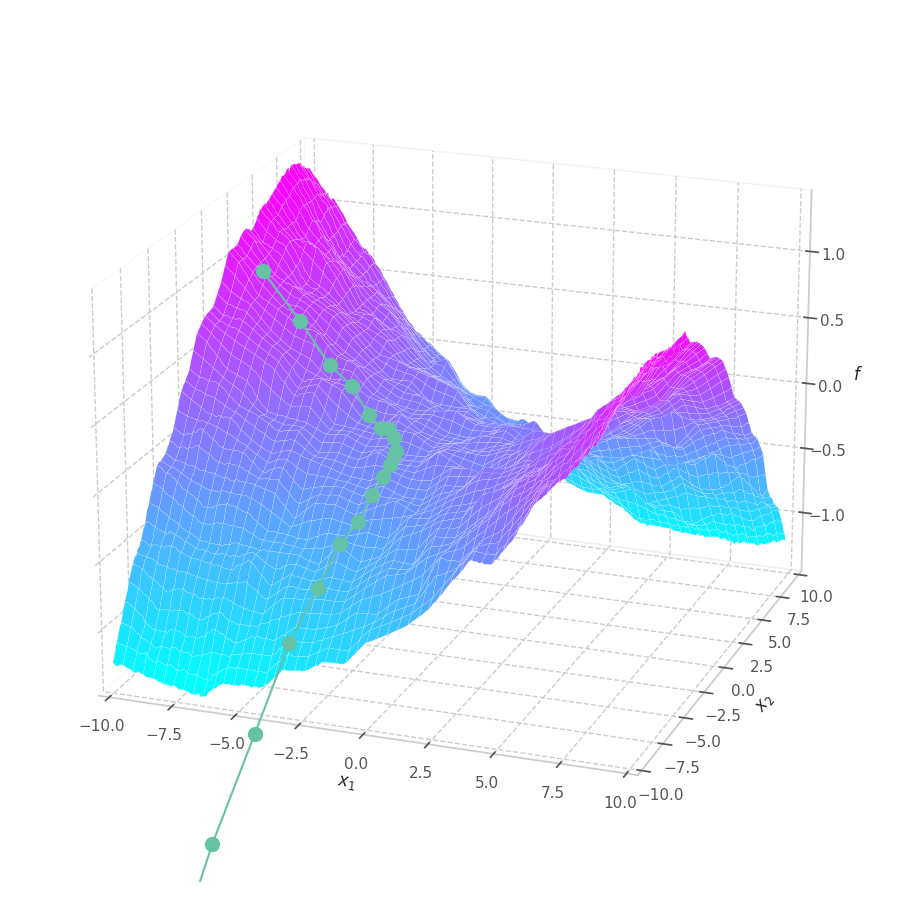

Training a Machine Learning model consists of traversing an optimization surface, seeking the best solution: the configuration of parameters θ associated with the lowest error or best fit. It would be interesting to have a visual representation of this process, in which problems such as local minima, noise and overshooting are explicit and better understood.

ML Optimization Visualization

Activation, Cross-Entropy and Logits

Discussion around the activation and loss functions commonly used in Machine Learning problems. – Aug 30, 2021

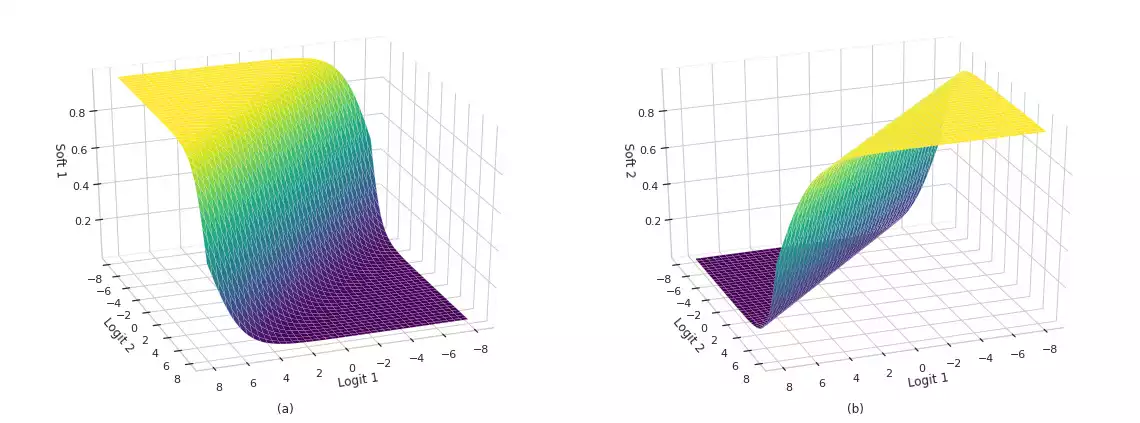

Activation and loss functions are key components in the training of Machine Learning networks. For classification problems, studies have focused on developing and analyzing functions capable of estimating posterior probabilities (class and label probabilities) with some degree of numerical stability. In this post, we present the intuition behind these functions, as well as their interesting properties and limitations. Finally, we also describe efficient implementations using popular numerical libraries such as TensorFlow.
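
As a rough illustration of the numerical stability concern mentioned above, here is a minimal pure-Python sketch of cross-entropy computed directly from logits with the log-sum-exp trick. The function names `log_softmax` and `cross_entropy_from_logits` are made up for this example and are not taken from the post or from TensorFlow.

```python
import math


def log_softmax(logits):
    """Return log-probabilities for a list of raw scores (logits)."""
    # Subtracting the maximum keeps exp() from overflowing on large logits.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]


def cross_entropy_from_logits(logits, target):
    """Negative log-likelihood of the class at index `target`."""
    return -log_softmax(logits)[target]


# A naive softmax would overflow on exp(1000.0); this version does not.
loss_large = cross_entropy_from_logits([1000.0, 0.0, -1000.0], target=0)
loss_uniform = cross_entropy_from_logits([0.0, 0.0], target=0)
```

Working in log space avoids ever forming the probabilities explicitly, which is why losses of this kind are usually fed logits rather than softmax outputs.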

ML Classification Multi-label Linear Optimization

K-Means and Hierarchical Clustering

Efficient implementations of clustering algorithms in TensorFlow and NumPy. – Jun 11, 2021

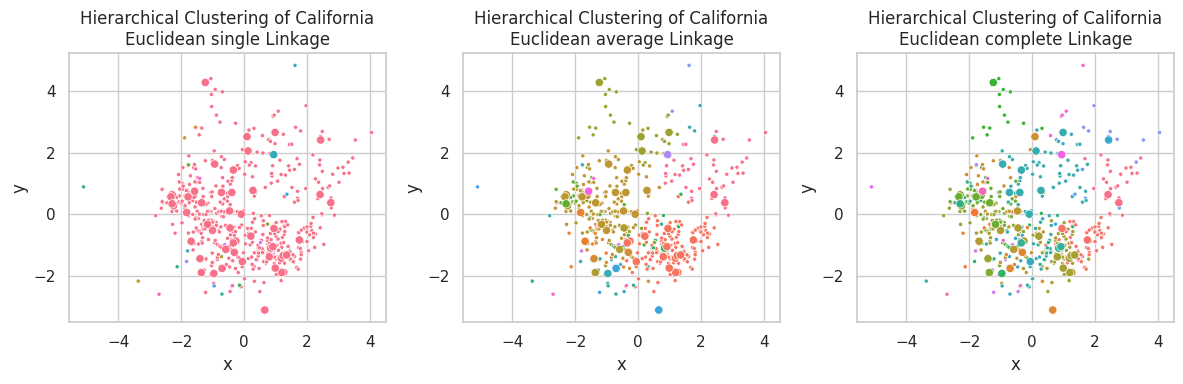

Here, our goal is to apply unsupervised learning methods to solve clustering and dimensionality reduction in two distinct tasks. We implemented the K-Means and Hierarchical Clustering algorithms (and their evaluation metrics) from the ground up. Results are presented over three distinct datasets, including a bonus color quantization example.

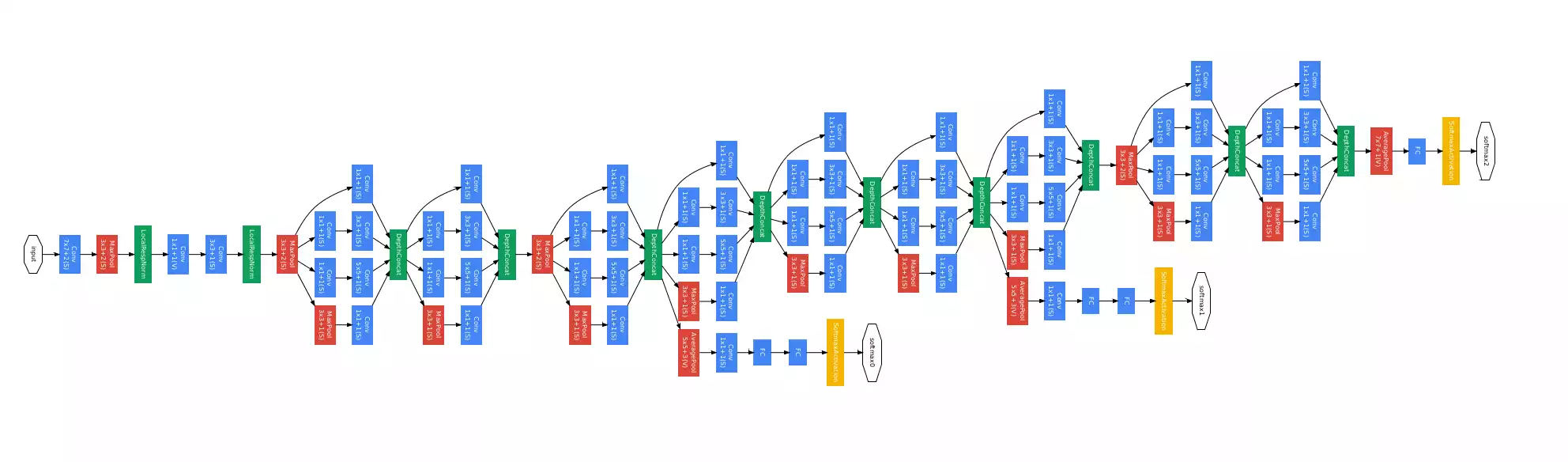

ML Clustering TensorFlow

Class Activation Mapping

Explaining AI with Grad-CAM. – Mar 23, 2021

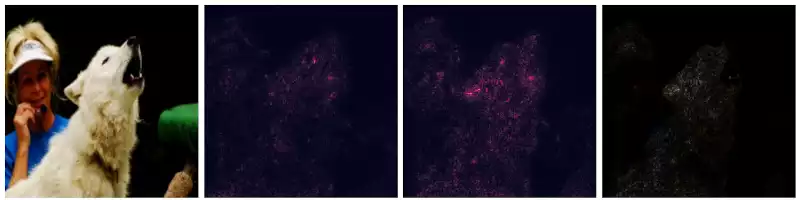

Gradient-based methods are a great way to understand a network's output, but cannot be used to discriminate classes, as they focus on low-level features of the input space. CAM-based visualization methods are an alternative.

ML AI Explaining

Explaining Machine Learning Models

Explainability using tree decision visualization, weight composition, and gradient-based saliency maps. – Jan 15, 2021

Estimators that are hard to explain are also hard to trust, jeopardizing the adoption of these models by a broader audience. Research on explaining CNNs has gained traction in the past years. I'll show two related methods in this post.

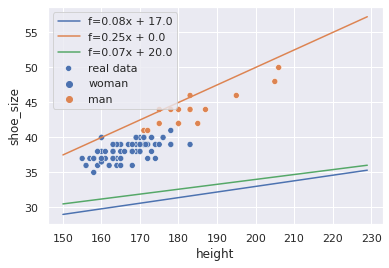

ML AI Explaining Scikit-Learn TensorFlow

Case study on linear regression (in Portuguese)

A detailed description of the principles of linear regression, based on a practical case. – Sep 30, 2020

ML is not some incomprehensible thing. It doesn't work for every case, and it won't magically give you a better solution than a well-thought-out deterministic implementation. It has its uses. We can solve problems in a relatively simple way in dynamic and messy environments, or where we can't make strong assumptions about how they work. This notebook explains a bit about linear models: how they work and how to use them efficiently. I hope it is instructive and sheds some light on the topic for everyone.

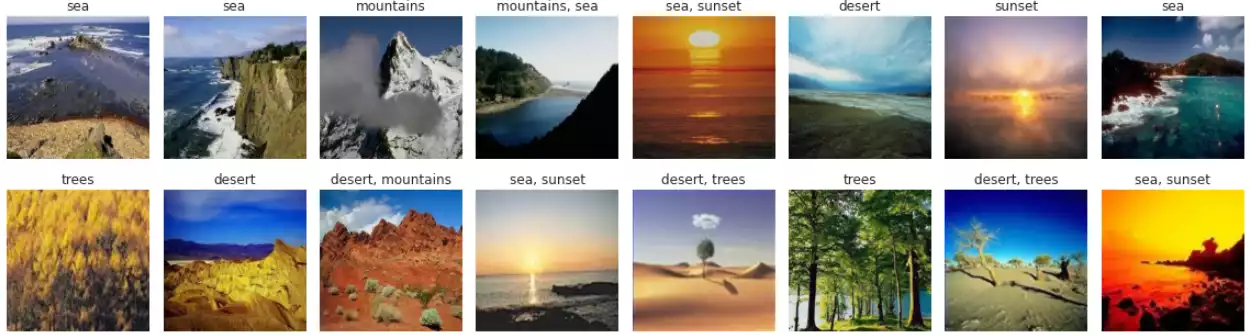

ML Regression Linear Optimization Portuguese

Multilabel Learning Problems

Dealing with ML classification problems where the classes assigned to a sample aren't mutually exclusive. – Oct 26, 2017

In classic classification with networks, each sample belongs to a single class. We usually encode this relationship using one-hot encoding: a label i is transformed into a vector [0, 0, ..., 1, ..., 0, 0], where the number 1 is located in the i-th position of the target vector.
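
The encoding described above can be sketched in a few lines of plain Python. The helper names `one_hot` and `multi_hot` are hypothetical, and the sketch assumes zero-based label indices; `multi_hot` is included only to contrast the single-class case with the multilabel one, where several positions can be set at once.

```python
def one_hot(label, num_classes):
    """Encode a single class index as a vector with one 1 (zero-based)."""
    if not 0 <= label < num_classes:
        raise ValueError("label must be in [0, num_classes)")
    vector = [0] * num_classes
    vector[label] = 1
    return vector


def multi_hot(labels, num_classes):
    """Encode a set of class indices; several positions may be 1."""
    vector = [0] * num_classes
    for label in labels:
        if not 0 <= label < num_classes:
            raise ValueError("label must be in [0, num_classes)")
        vector[label] = 1
    return vector


single = one_hot(2, 5)          # exactly one active class
several = multi_hot([0, 3], 5)  # two active classes for the same sample
```

A one-hot vector always sums to 1, while a multi-hot vector sums to the number of labels attached to the sample.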

ML Classification Multi-label

Experimenting with Sacred

The basics of experimenting with Sacred: executing, logging and reproducing. – Oct 2, 2017

When studying machine learning, you'll often find yourself training multiple models, testing different features or tweaking parameters. While a spreadsheet might help you, it's not perfect for keeping logs, results and code. Believe me, I tried. An alternative is Sacred. This package allows you to execute multiple experiments and record everything in an organized fashion.

DevOps